This is a major breakthrough in character consistency for AI videos. Kling AI has released “Custom Models”: you can now train your own video model for superior character consistency.

Overview

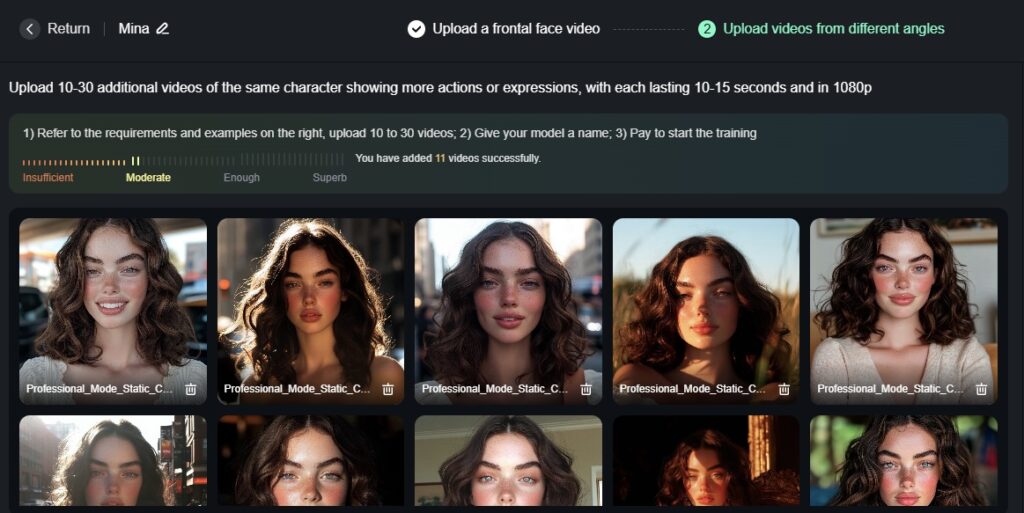

The custom video model allows you to train a consistent video character, based on 10–30 videos, with each video being at least 10 seconds long. Usually, you would train an image model on images, but here, we use videos to train a video model.
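Before uploading, it can help to check your clips against the limits mentioned above (10 to 30 videos, each at least 10 seconds long). The snippet below is a minimal sketch of such a check, not part of Kling AI. The function name `check_training_set` and the file names are hypothetical, and it assumes you have already measured each clip's duration in seconds with a tool of your choice.

```python
# Limits taken from the article; adjust if Kling AI's requirements differ.
MIN_CLIPS = 10
MAX_CLIPS = 30
MIN_SECONDS = 10.0


def check_training_set(durations: dict[str, float]) -> list[str]:
    """Return a list of problems; an empty list means the set fits the limits.

    `durations` maps a clip name to its length in seconds (hypothetical input,
    measured by you beforehand).
    """
    problems = []
    count = len(durations)
    if count < MIN_CLIPS:
        problems.append(f"need at least {MIN_CLIPS} clips, found {count}")
    elif count > MAX_CLIPS:
        problems.append(f"at most {MAX_CLIPS} clips allowed, found {count}")
    # Flag every clip that is shorter than the minimum length.
    for name, seconds in sorted(durations.items()):
        if seconds < MIN_SECONDS:
            problems.append(
                f"{name}: {seconds:.1f}s is shorter than {MIN_SECONDS:.0f}s"
            )
    return problems


if __name__ == "__main__":
    # Example with made-up durations: ten clips, one of them too short.
    clips = {f"clip_{i:02d}.mp4": 12.0 for i in range(9)}
    clips["clip_09.mp4"] = 7.5
    for problem in check_training_set(clips):
        print(problem)
```

Returning a list of problems rather than raising on the first one lets you fix every clip that falls short in a single pass.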

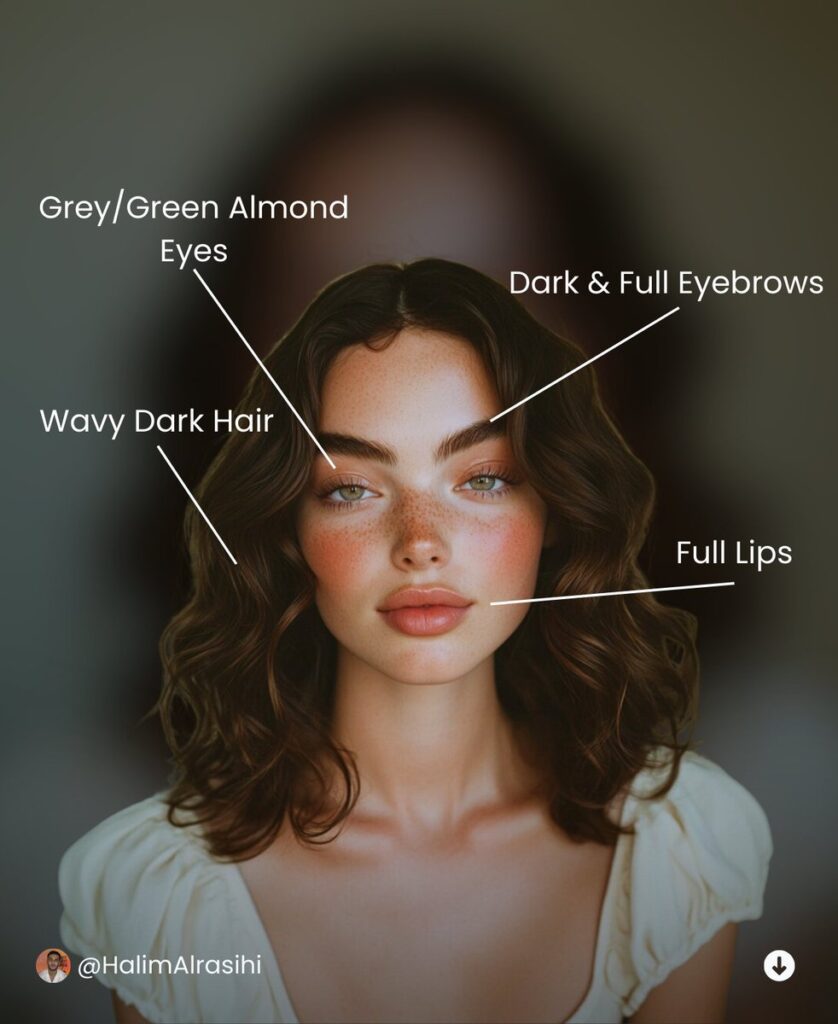

Kling AI’s custom model captures all the important features of the character in your video. Before you start training, you need to create a character or use an existing one. I generated mine in Midjourney, then created more images of her using the character reference.

Next, upload images of your character to Kling AI and create 10 videos where the character’s face is the focus. Use different backgrounds, expressions and activities, but keeping it simple worked really well for me.

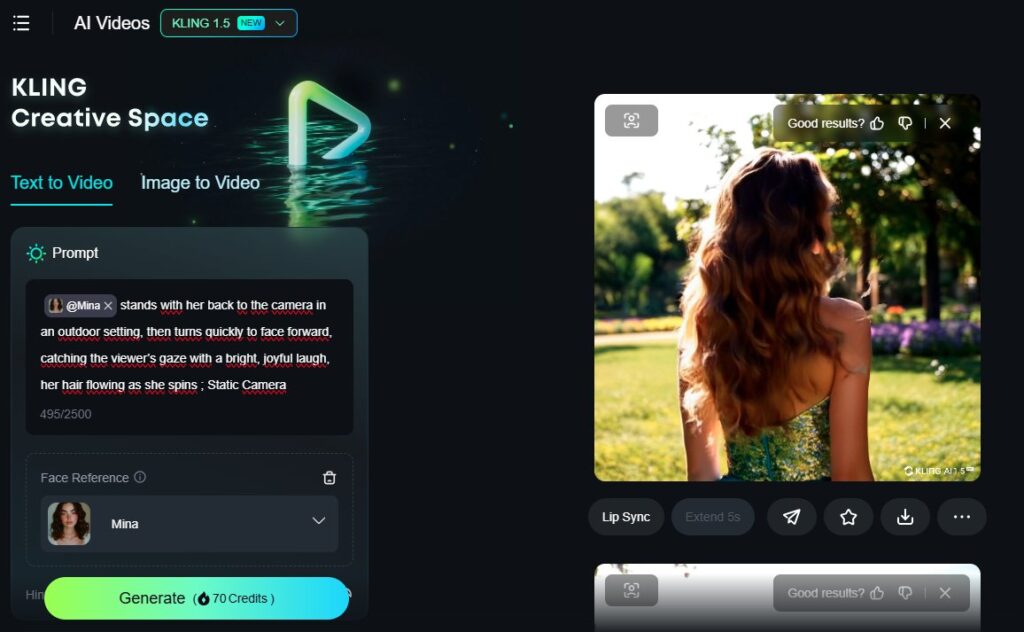

Submit and train your model! Now you can simply tag your model in your text-to-video prompt and describe your scene.

Here’s the most important test: a turnaround!

Prompt:

[@ character] stands with her back to the camera in an outdoor setting, then turns quickly to face forward, catching the viewer’s gaze with a bright, joyful laugh, her hair flowing as she spins.

Result:

You can be really creative with Kling AI’s new custom model. Use camera movements and actions in your prompt to reveal your character’s face after a brief moment, rather than showing it immediately.

The scene opens with a close-up of her hand adjusting an intricate necklace, her face just out of frame. The camera tilts up slowly, until her face finally comes into view, framed by dim, flickering candlelight.

Author: @HalimAlrasihi
