Lip sync is now available in Kling AI, making it easier than ever to synchronize audio with video. In this article, we’ll cover everything you need to know about the lip sync feature, including how to use it, tips for achieving the best results, and potential limitations to be aware of.

Setting Up Lip Sync in Kling AI

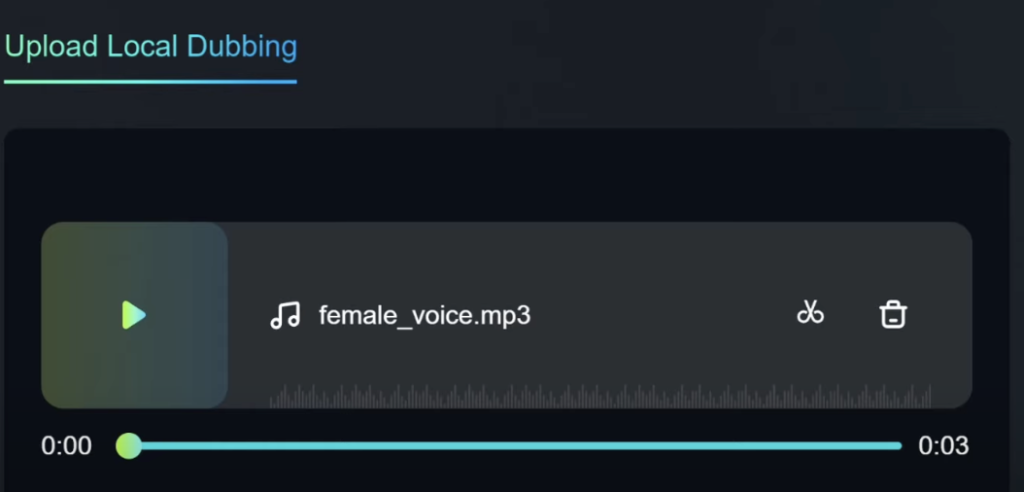

To start using the lip sync feature, log into the Kling AI video interface. You'll need two inputs: a base video for the AI to work with and an audio file containing the speech.

The best results come from a close-up shot of a face with the lips clearly visible. For example, you can create a video from an image generated with a tool like Midjourney and then turn it into a speaking video.

Once you have your base video ready, click the “Match Mouth” button to initiate the lip sync process.

The AI will analyze the video and prepare it for synchronization. After that, you can upload your audio file. If the audio is longer than the video, Kling AI lets you crop it to the video's duration, but it's easier to upload audio that is no longer than the video in the first place.
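If you'd rather shorten the audio yourself before uploading, here is a minimal sketch using Python's standard `wave` module. It assumes the audio is an uncompressed WAV file and that you already know the base video's length in seconds. The function `trim_wav` and the file names are hypothetical, and this runs on your own machine; it is not part of Kling AI.

```python
import wave


def trim_wav(src_path: str, dst_path: str, max_seconds: float) -> float:
    """Copy at most max_seconds of audio from src_path into dst_path.

    Hypothetical helper: works only on uncompressed WAV files.
    Returns the duration, in seconds, of the written file.
    """
    with wave.open(src_path, "rb") as src:
        params = src.getparams()
        # Convert the time limit into a frame count at the file's sample rate.
        max_frames = int(max_seconds * params.framerate)
        n_frames = min(params.nframes, max_frames)
        frames = src.readframes(n_frames)

    with wave.open(dst_path, "wb") as dst:
        # Reuse the source format (channels, sample width, rate); the frame
        # count in the header is updated automatically when the file closes.
        dst.setparams(params)
        dst.writeframes(frames)

    return n_frames / params.framerate
```

For example, for a 5-second base video you might call `trim_wav("voiceover.wav", "voiceover_trimmed.wav", 5.0)` and upload the trimmed file. Audio that is already shorter than the limit is copied unchanged.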

Processing and Adjusting Lip Sync

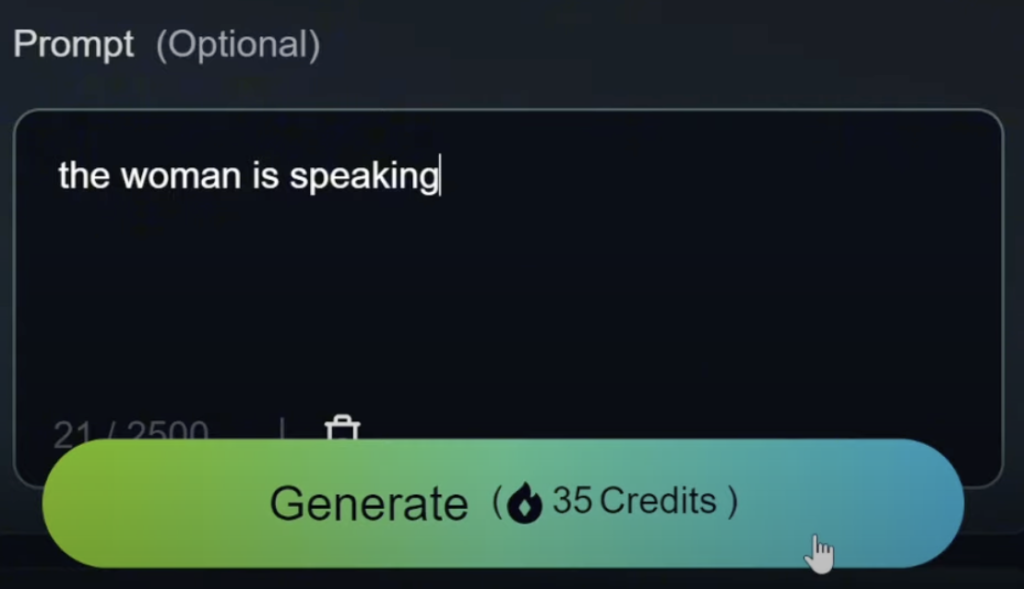

After uploading the audio, click the lip sync button to start synchronization. Processing can take up to 10 minutes, though it often finishes in about 5. The results are typically quite realistic, with crisp, natural-looking lip movements.

However, you might notice slight blurring around the lips and teeth. On close inspection, this can reveal that the video is AI-generated. If you aren't satisfied with the result or want to try a different audio file, use the "Redub" button to upload new audio and try again.

Using Lip Sync with Different Animation Styles

Kling AI's lip sync feature works with a range of animation styles. It performs best on 3D or photorealistic footage, especially when the character's head stays mostly still or moves only subtly. It can still track the lips when the head shifts slightly, which helps in more dynamic scenes.

For anime-style videos or characters with exaggerated movements, the results are less smooth. The lips can drift out of sync with the audio, producing choppy animation. To improve the results, use prompts that call for minimal movement when generating the base video, such as "subtle motion" or "static camera."

Limitations and Special Considerations

While Kling AI’s lip sync is powerful, it has some limitations. It performs best with humanoid faces and may struggle with non-human or hybrid characters. For example, a 3D water elemental animation or a hybrid dog-human model might result in errors if the AI cannot detect a consistent face in the video.

Another limitation is that you can't choose which face the AI syncs to when a video contains multiple characters. The software automatically picks one face to match with the audio, so you can't assign a voice to a specific character, and the voice may end up on the wrong one.

Conclusion

The lip sync feature in Kling AI is a valuable tool for creating videos with synchronized audio, particularly for close-up and 3D animations. While it has some limitations, such as difficulty with non-humanoid faces and limited control over multi-character videos, it remains a convenient option for most projects. With a little experimentation, you can create impressive, realistic-looking videos without needing external software.
